Theoretical Paper

- Computer Organization

- Data Structure

- Digital Electronics

- Object Oriented Programming

- Discrete Mathematics

- Graph Theory

- Operating Systems

- Software Engineering

- Computer Graphics

- Database Management System

- Operations Research

- Computer Networking

- Image Processing

- Internet Technologies

- Microprocessor

- E-Commerce & ERP

Practical Paper

Industrial Training

Von Neumann Computer

There are two fundamental architectures for accessing memory: the Von Neumann architecture and the Harvard architecture.

Von Neumann architecture (named after John von Neumann): One shared memory holds both instructions (the program) and data, with one data bus and one address bus between processor and memory. Instructions and data therefore have to be fetched one after the other over the same bus (known as the Von Neumann bottleneck), which limits the operating bandwidth. Its design is simpler than that of the Harvard architecture, which uses separate memories and buses for instructions and data. It is mostly used to interface to external memory.
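The shared-memory idea can be made concrete with a small simulation. The following is only a minimal sketch of a hypothetical toy machine: the opcodes (`LOAD`, `ADD`, `STORE`, `HALT`), the two-cell instruction format and the memory layout are all invented for illustration and do not correspond to any real processor. It shows the program and its data living in one list, and counts how often the single shared bus is used.

```python
# Hypothetical toy machine: opcodes and layout are assumptions made for illustration.
HALT, LOAD, ADD, STORE = 0, 1, 2, 3


def run(memory):
    """Execute a program stored in the same memory that holds its data.

    Returns the final memory contents and the number of accesses made
    over the single shared bus (instruction fetches plus data accesses).
    """
    memory = list(memory)  # work on a copy so the caller's list is untouched
    pc = 0                 # program counter: address of the next instruction
    acc = 0                # accumulator register
    bus_accesses = 0

    while True:
        # Instruction fetch: opcode and operand come from the shared memory.
        opcode, operand = memory[pc], memory[pc + 1]
        bus_accesses += 2
        pc += 2

        if opcode == HALT:
            break
        elif opcode == LOAD:
            acc = memory[operand]      # data read over the same bus
            bus_accesses += 1
        elif opcode == ADD:
            acc += memory[operand]     # data read over the same bus
            bus_accesses += 1
        elif opcode == STORE:
            memory[operand] = acc      # data write over the same bus
            bus_accesses += 1
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 2}")

    return memory, bus_accesses


# Program: add the values at addresses 10 and 11, store the sum at address 12.
program = [
    LOAD, 10,    # addresses 0-1
    ADD, 11,     # addresses 2-3
    STORE, 12,   # addresses 4-5
    HALT, 0,     # addresses 6-7
    0, 0,        # addresses 8-9 (unused padding)
    2, 3, 0,     # addresses 10-12: the data, in the same memory as the code
]

final_memory, accesses = run(program)
print(final_memory[12], accesses)
```

In this sketch every instruction fetch and every data access goes through the same counter, which is the point of the bottleneck: the processor cannot fetch the next instruction while it is reading or writing data. A Harvard-style machine would keep the program and the data in two separate memories that can be accessed in parallel.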

The Von Neumann model

The modern microcomputer has roots going back to the USA in the 1940s. Of the many researchers, the Hungarian-born mathematician John von Neumann (1903-57) is worthy of special mention. He developed a very basic model for computers which we are still using today.

John von Neumann (1903-57), progenitor of the modern electronic PC.

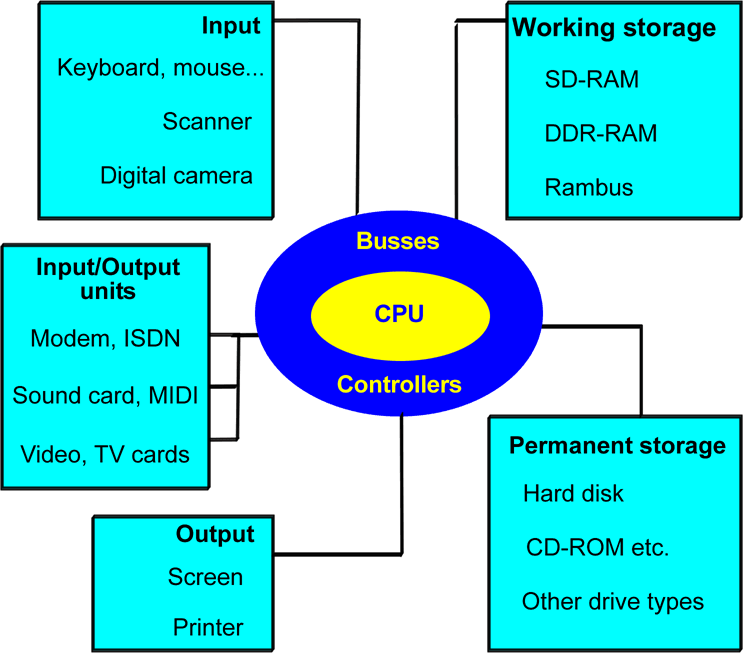

Von Neumann divided a computer’s hardware into 5 primary groups:

- CPU

- Input

- Output

- Working storage

- Permanent storage

This division provided the foundation for the modern PC, as von Neumann's design was among the first for a computer with working storage (what we today call RAM). The amazing thing is that his model is still applicable today. If we apply the von Neumann model to today’s PC, it looks like this:

The Von Neumann model in the year 2004.

Today we talk about multimedia PCs, which are made up of a wealth of interesting components. Note here that modems, sound cards, video cards and so on all function as both input and output units. But input and output don’t happen simultaneously, as the model might lead you to believe. At the basic level, the von Neumann model still applies today. All the components and terms shown in Fig. 11 are important to be aware of. The model generally helps in gaining a good understanding of the PC, so I recommend you study it.

Cray supercomputer, 1976

In April 2002 I read that the Japanese had developed the world’s fastest computer. It is a huge thing (the size of four tennis courts), which can execute 35.6 trillion mathematical operations per second. That’s five times as many as the previous record holder, a supercomputer from IBM.

The report from Japan shocked the Americans, who considered themselves to be the leaders in the area of computer technology. While the American supercomputers are used for the development of new weapons systems, the Japanese one is to be used to simulate climate models.

The PC’s system components

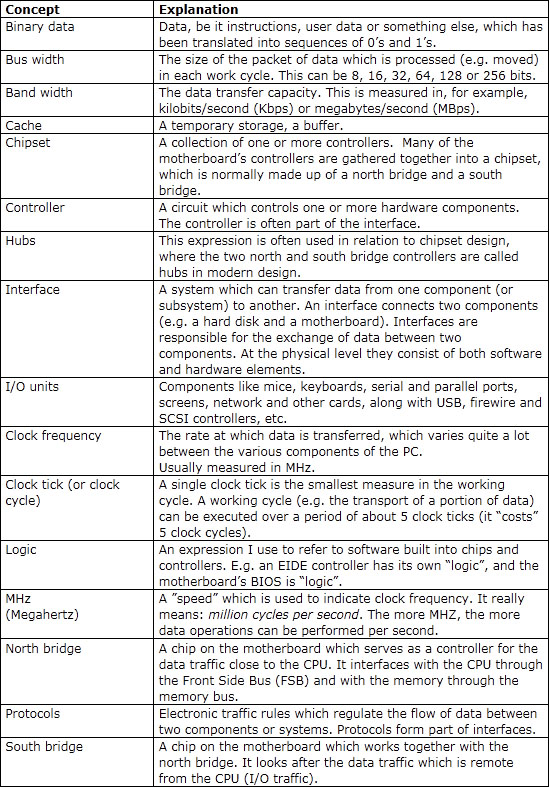

This chapter is going to introduce a number of the concepts which you have to know in order to understand the PC’s architecture. I will start with a short glossary, followed by a brief description of the components which will be the subject of the rest of this guide, and which are shown in the figure "The Von Neumann model in the year 2004".

The necessary concepts

I’m soon going to start throwing around words like interface, controller and protocol. These aren’t arbitrary words. In order to understand the transport of data inside the PC we need to agree on some terminology. I have explained a handful of the terms below. See also the glossary in the back of the guide.

The concepts below are quite central. They will be explained in more detail later in the guide, but start by reading these brief explanations.