Theoretical Paper

- Computer Organization

- Data Structure

- Digital Electronics

- Object Oriented Programming

- Discrete Mathematics

- Graph Theory

- Operating Systems

- Software Engineering

- Computer Graphics

- Database Management System

- Operation Research

- Computer Networking

- Image Processing

- Internet Technologies

- Micro Processor

- E-Commerce & ERP

Practical Paper

Industrial Training

Morphing

this document tries to introduce the reader to a term derived from the word metamorphosis, another word for transformation: morphing.

morphing describes the action taking place when one image (in this case a digital image) gets transformed into another or, in a more technical way, morphing describes the combination of generalised image warping with cross-dissolve between image elements.

the idea is to specify a warp (warping, see chapter 3.2) that distorts the first image into the second.

by doing this effectively a viewer experiences the illusion that the photographed or computer generated subjects are transforming in a fluid, surrealistic, and often dramatic way.

the introduction gives a short overview over the development of morphing. this paper also introduces warping, which is the technique morphing is based on.

four different morphing methods, respectively related approaches are described in section 3.

2. introduction

in the early states of image metamorphosis special filmmaking techniques were used, but now and since at least one decade the morphing process is executed by computers and that in a much more convincing way.

2.1 metamorphosis without computers

in early days morphing was dominated by the usage of cheap and not very realistic photographic effects (like cross-dissolve or fading, in which one image is faded out while another is simultaneously faded in).

doing this as good as possible leads to the so-called stop-motion animation, in which the subject is progressively transformed and photographed one frame at a time. - but there are major disadvantages in connection with this process: doing stop-motion causes a lot of tedious work and visual strobing by not providing the motion blur normally associated with moving film subjects. trying to fix the above mentioned disadvantages led to the so-called go-motion. here the frame-by-frame subjects are photographed while moving. now you get a more realistic effect by having proper motion blur, but the complexity becomes even greater due to the motion hardware and additionally required skills.

2.2 computer aided metamorphosis

one great progress in morphing was the usage of computer graphics to model and render images, which transform over time.

there are two reasonable ways a computer can see objects (or work with objects) for transformations 3-dimensional and 2-dimensional. working with objects in 3-dimensional space involves a collection of polygons used for their representation. for the purpose of metamorphosis the vertices of the first object get displaced over time to coincide in position and colour of the second object (colour gets interpolated). both objects must have the same number of polygons to allow correspondence between the vertices. problems occur if the topologies of the two objects differ (e.g. one has got a hole through it).

in a lot of cases the manipulation of 2-dimensional images leads to the same results as doing so with 3-dimensional objects.

2.3 morphing

morphing is an image processing technique typically used as an animation tool for the metamorphosis from one image to another.

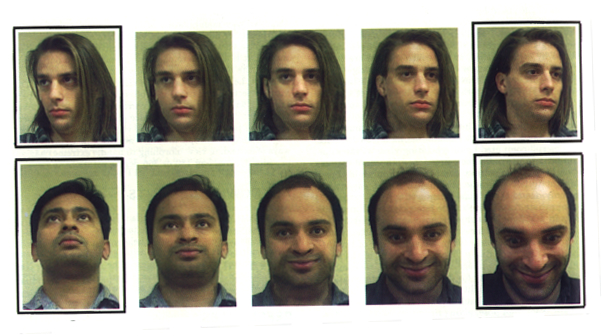

the whole metamorphosis from one image to the other consists of fading out the source image and fading in the destination image. thus, the early images in the sequence are much like the source image and the later images are more like the destination image. the middle image of the sequence is the average of the source image distorted halfway toward the destination image and the destination image distorted halfway back to the source image. this middle image is rather important for the whole morphing process. if it looks good then probably the entire animated sequence will look good. for example, when morphing between faces, the middle face often looks strikingly life-like but is neither the first nor the second person in the image.

3. tricky morphing techniques

i would like to introduce three different approaches concerning morphing.

3.1 field morphing

this morphing algorithm is basically based on fields of influence surrounding 2-dimensional control primitives.

in general there exist two different ways of image warping.

forward mapping scans through the source image pixel by pixel, and copies them to the appropriate place in the destination image. reverse mapping goes through the destination image pixel by pixel, and samples the correct pixel from the source image. the advantage of this algorithm is that every pixel of the destination image gets set to something appropriate. this deformation method will be used with field morphing.

transformation with one pair of lines

one basic concept of field morphing consists of a pair of lines, one defined relative to the source image and the other defined relative to the destination image. these lines define a co-ordinate mapping from the destination image pixel co-ordinate x to the source image pixel co-ordinate x resulting in a line pq in the destination image and p q in the source image (see figure below).

the value u is the position along the line, and v is the distance from the line. the value u goes from 0 to 1 as the pixel moves from p to q, and is less than 0 or greater than 1 outside that range. the value v is the perpendicular distance in pixels from the line. if there is just one line pair, the transformation of the image proceeds as follows:

for each pixel x in the destination imagefind the corresponding u,v

find the x in the source image for that u,v

destinationimage(x) = sourceimage(x )

x is the location to sample the source image for the pixel at x in the destination image. the location is at a distance v (the distance from the line to the pixel in the source image) from the line p q , and at a proportion u along that line.

the algorithm transforms each pixel co-ordinate by a rotation, transformation, and/or a scale, thereby transforming the whole image.

transformation with multiple pairs of lineseverybody can imagine that more than one pair of lines results in a more complex transformation. however, weighting of the co-ordinate transformations for each line has to be performed. the weight assigned to each line should be strongest when the pixel is exactly on the line, and weaker the further the pixel is from it.

in the above figure, x s the location to sample the source image for the pixel at x in the destination image. that location is a weighted average of the two pixel locations x1 and x2 , computed with respect to the first and second line pair, respectively.

the closer the pixels are to a line, the more closely they follow the motion of that line, regardless of the motion of other lines.

pixels near the lines are moved along with the lines, pixels equally far away from two lines are influenced by both of them.

morphing between two images

a morph operation blends between two images, i0 and i1. to do this, we define corresponding lines in i0 and i1. each intermediate frame i of the metamorphosis is defined by creating a new set of line segments by interpolating the lines from their positions in i0 to the positions in i1. both images i0 and i1 are distorted toward the position of the lines in i. these two resulting images are cross-dissolved throughout the metamorphosis, so that at the beginning the image is completely i0. halfway through the metamorphosis it is half i0 and half i1, and at the end it is completely i1.

there are two different ways to interpolate the lines.

the first way is to just interpolate the endpoints of each line. the second way is to interpolate the centre position and orientation of each line, and interpolate the length of each line.

advantages & disadvantages

the probably biggest advantage of this technique is that it is very impressive and the animator is free to position the lines he wants for the image to build the reference base lines during the morphing process, corresponding from the source image to the destination image.

the two biggest disadvantages of the feature-based technique are speed and control. because it is global, all line segments need to be referenced for every pixel, which can cause speed problems. in some line combinations and special transformation processes unexpected and unwanted interpolations are generated, that cause additional fixing effort.

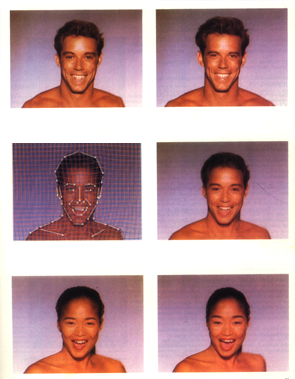

nice samples

the upper left image is the upper right source

image distorted to the intermediate position.

the lower left image is the lower right destination image distorted

toward that same position.

note that the blend (morph) between the two distorted images (middle right image)

is much more life-like than the either of the distorted images

themselves. the middle left image shows the morphed image with the interpolated lines drwan over it.

the final sequence are the images on the left side top-down viewed.

3.2 skeleton-based image warping

image warping is a geometric transformation that maps a source image onto a target image.

in image processing, warping is used to rectify distorted images, mapping nonrectangular patches onto rectangular ones.

in computer graphics, warping plays an opposite role: the target image is the 2d projection of a 3d surface onto which the source image had been mapped. hence warping is a composite mapping of the reparameterization from 2d image (texture) space to 3d object space, and the subsequent projection onto 2d screen space. consequently, rectangular patches are mapped onto nonrectangular ones. this procedure is known as texture mapping.

the algorithm described in this chapter treats an image as a collection of interior layers, which are extracted in a manner similar to peeling an onion. the aim is to map a source image, s, onto a target image, t, each of whose boundaries are arbitrary. both s and t are actually subimages, which may be extracted with any kind of segmentation method. pixels lying in the extracted subimage are designated as foreground. the remaining pixels are assigned a background value.

the mapping will effectively treat s as if it were printed on a sheet of rubber, and stretch it to take the shape of t.

three stages of the algorithm

the warping algorithm has three stages:

reparameterize s and t using a transformation function g. this yields s and t , respectively. apply a second transformation h to map (resample) s onto t . apply an inverse mapping, g-1, to convert t into t, the desired result.

reparameterization into the (u,v) parameter space

this chapter describes the above-mentioned function of the first stage that transforms images s and t into a new parameter space. this reparameterization corresponds to function g in the above figure. the algorithm decomposes a 2d image into an alternate representation consisting of layers of interior pixels. the layers are extracted in a manner like peeling an onion. this imposes a (u,v) parameterization in which u runs along the boundary, and v runs radially inward across successive interior layers.

since the mapping information is only defined along the boundary, it is reasonable to first consider the mapping of adjacent points. these points consist of the adjacent layer. this data can then propagate further until the innermost layer is assigned a correspondence (skeleton).

in a convex shape, an eroding boundary coincides with shrinking (scaling down) the boundary positions about the centroid (area around the centre).

problems can arise if scaling is applied to shapes containing concavities. the reduced boundary does not lie entirely within the larger adjacent boundary, and the centroid is no longer guaranteed to lie within the shape.

the difficulty of expressing erosion analytically for shapes containing concavities is bypassed with a discrete approximation a thinning algorithm.

thinning

thinning, together with boundary traversal, is used to erode foreground pixels along the boundary while satisfying a necessary connectivity constraint. this helps to impose the (u,v) co-ordinate system upon the s and t images.

classical thinning algorithms operate on binary raster images. they scan the image with a window, labelling all foreground pixels lying along the boundary with one of two labels, del or skl.

del is designated to set the foreground pixel as deleteable (flip to background value), if the foreground pixel is not found essential in preserving the mutual connectivity.

the skl label is applied to skeletal points, pixels, which must be kept in order to preserve neighbourhood connectivity. this pixel is said to lie on the shape s symmetric axis, or skeleton, which has the property of being fully connected.

after each thinning pass a boundary traversal follows.

boundary traversal

during the boundary traversal the boundaries are traversed while concurrently initialising the appropriate layer list (list of the appropriate image used to store the layers pixel (-colour)) and deleting those pixels labelled del.

skl pixels remain intact and are guaranteed to appear in all subsequent layers.

the starting point for such a traversal is initially chosen to be the top-leftmost boundary point or a boundary point of high curvature (this decision has a bunch of advantages). clearly the most reliable choice for a starting point is one which is known to be contained in all subsequent layers. since skeletal points fulfil this property, the first encountered skeletal point may be chosen to take on this role. all subsequent traversals will then begin from that skeletal point.

example:

|

|

0/34 | 0/33 | 0/32 | 0/31 | ||||||||

| 0/02 | 0/01 |

1/00 |

1/28 | 1/27 | 0/30 | |||||||

| 0/04 | 0/03 | 1/02 | 1/01 | 2/10 | 1/26 | 0/29 | ||||||

| 0/05 | 1/04 | 1/03 | 2/12 | 2/11 | 2/09 | 1/25 | 0/28 | |||||

| 0/06 | 1/05 | 2/14 | 2/13 | 3/12 | 3/09 | 2/08 | 1/24 | 0/27 | ||||

| 0/07 | 1/06 | 2/15 | 3/13 | 4/12 | 4/08 | 3/08 | 2/07 | 1/13 | 0/26 | |||

| 0/08 | 1/07 | 2/16 | 3/14 | 4/07 | 3/07 | 2/06 | 1/22 | 0/25 | ||||

| 0/09 | 1/08 | 2/17 | 3/06 (06) |

2/05 | 1/21 | 0/24 | ||||||

| 0/10 | 1/09 | 2/18 | 2/19 (05) |

2/04 | 1/20 | 0/23 | ||||||

| 0/11 | 1/10 | 1/11 | 2/20 (04) |

2/03 (03) |

1/19 | 0/22 | 0/21 | |||||

| 0/12 | 0/13 | 1/12 | 1/13 | 1/14 (00) |

1/18 | 0/20 | ||||||

| 0/14 | 0/15 | 1/15 | 1/16 (01) |

1/17 | 0/19 | |||||||

| 0/16 | 0/17 | 0/18 |

consider the shape given in the figure above. all foreground pixels are labled with a number (label/pos) and all skeletal pixels are highlighted with a darker background. the shape is processed in passes consisting of the following two steps each:

apply one pass of a thinning process to label all boundary points as deleteable (del) or skeletal (skl). traverse the boundary as described in "boundary traversal". this procedure serves to initialize the appropriate layer list and expose interior layers so that they may become traversed in subsequent passes.

data structure: layer-trees

the recursive subdivision of layers gives rise to a tree representation. a l-tree has the following simple properties:

each level of the tree corresponds to an interior layer of the shape, starting with the outermost layer in the root. the vertices in each level include del and first-generation skl labelled pixels. the leaves build a strip which have no descendants, the entire strip maps onto an old skl segment in the previous (upper) layer.

the internal structure of an l-tree can be encoded as shown below:

struct {

unsigned char *imgbf; /* pointer to list */

int len; /* length of list */

int *links; /* pointer to auxiliary data */

}

the (u,v) parameters

the (u,v) parameterisation is conveniently represented by l-trees where the (u,v)-co-ordinates are indices into the layer lists stored in the tree vertices.

the v value corresponds to the layer beginning at the root where v=0 and the v-co-ordinate gets incremented at each successive tree level.

the u value corresponds to the layer length (number of pixels presented in each boundary).

for the following mapping within the (u,v) parameter space an image of conventional format is required, including the pixels currently stored in the l-tree.

mapping within the (u,v) parameter space

this section describes the function h that resamples s onto t . the mapping solution in the (u,v) space is now more tractable than its counterpart in the rectilinear co-ordinate system.

first pass: normalising the v-axis

for each u resample the column of pixels along the v-axis

so that the maximum v samples are used for the corresponding radial

path (column on figure above). this forces all columns to be supersampled

at a rate dictated by the maximum v. second pass: resampling the

u-axis

this is a similar procedure as the above normalisation; now the u-axis

in s gets scaled to match the dimension of t . third pass:

resampling the v-axis

now that the (u,v) parameter spaces for s and t have

identical dimensions in the u-direction, the information in the

v-direction must be made identical as well column by column.

reparameterization from (u,v) to (x,y)

now the resampled data of s has to get reapplied onto t, corresponding to the function g-1. two steps are required (reverse procedure of "reparameterization into the (u,v) parameter space"!):

scale the appropriate intervals along the row of t to update the layers in t s l-tree (by using interpolation). traverse shape t while concurrently updating the traversed pixels with the values stored in the l-tree.

advantages & disadvantages

the algorithm described is an efficient way to perform image warping among arbitrary shapes.

in spite of not being the most effective algorithm the described approach equipped with a few extensions from the current discrete implementation to a continuous domain offers promising possibilities for increased accuracy and control.

a few examples

the following two figures show how images can be mapped onto arbitrary shapes:

the first figure shows four images. s and t are displayed in the upper left and lower left quadrants, respectively. the lower right quadrant shows s mapped onto the shape defined by the foreground pixels of t. the mapping of t onto s is shown in the upper right quadrant.

this figure shows two textures mapped to the target facial shape (the second texture is the chess board of the figure above).

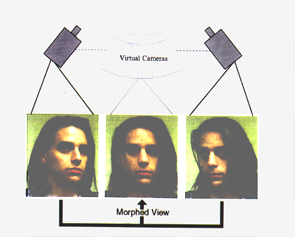

3.3 view morphing

this part of tricky morphing techniques introduces a simple but very challenging extension to image morphing that correctly handles 3d projective camera and scene transformations.

introduction

because no knowledge of 3d shape is required, the technique may be applied to photographs and drawings, as well as rendered scenes.

view morphing between two images of an object taken from two different viewpoints produces the illusion of physically moving virtual camera. the effect can be described by what you would see if you physically moved the object (or the camera) between its configurations in the two images and filmed the transition:

when morphing between different views of an object or scene, the technique produces new views of the same scene, ensuring a realistic image transition.

view morphing takes advantage of existing image morphing techniques, already in widespread use, for part of the computation. existing image morphing tools may be easily extended to produce view morphs by adding the image prewarping and postwarping steps.

view morphing works by prewarping two images, computing a morph (image warp and cross-dissolve) between the prewarped images, and then postwarping each interpolated in-between image produced by the morph. the prewarping step is performed automatically while the postwarping procedure may be interactively controlled by means of a small number of user-specified control points.

technique

computing the morph requires the following:

two images i0 and i1 representing views of the same 3d object or scene their respective projection matrices m0 and m1 a correspondence between pixels in the two images

there is no a priori knowledge of 3d-shape information needed.

when the pixel correspondence is correct, the methods described in this section guarantee shape preserving morphs.

the three stages of the algorithm are:

morphing the prewarped images moves the optical centre to cs.

postwarping transforms the image plane of the new view to its desired position and orientation.

enhancements

the technique described in the previous chapter can be extended to accommodate a range of 3d shape deformations. view morphing can be used to interpolate between images of different 3d projective transformations of the same object, generating new images of the same object, projectively transformed.

the advantage of using view morphing in this context is that salient features such as lines and conic shapes are preserved during the course of the transformation from the first image to the second.

another enhancement is to leave out the prewarp entirely in cases of considerably different objects. prewarping is less effective for morphs between different objects not closely related by a 3d projective transform. - the postwarp step should not be omitted, however, since it can be used to reduce image plane distortions for more natural morphs.

advantages & disadvantages

because no knowledge of 3d shape is required, the technique may be applied to photographs and drawings, as well as to artificially rendered scenes. this is probably the most remarkable advantage of view morphing.

since view morphing relies exclusively on image information, it is sensitive to changes in visibility. problems occur if the visibility is not constant, i.e., not all surfaces are visible in both images. a related problem that has not been solved yet is the usage of view morphing for 180° or 360° rotations in depth.

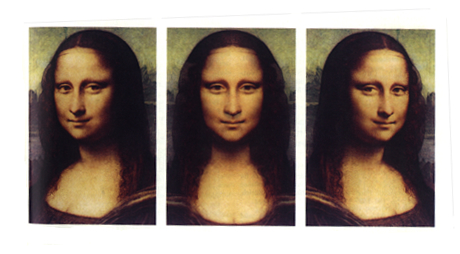

nice samples

4. conclusions

the morphing techniques introduced in this document have one thing in

common: they concentrate on a topic of computer graphics, which is one

of the most fascinating aspects of computer graphics.

the papers that this document is based on are only a subset of morphing

techniques and closely related computer graphics applications.

one of the early useful morphing techniques, skeleton-based image warping by georg wolberg, involved an interesting way to map a 2-dimensional image to an arbitrary shape.

using this morphing technique as a starting point, thaddeus beier and shawn neely developed a challenging morphing technique for transforming one digital image into another by giving the animator high-level control of the visual effect, such as natural feature-based specification and interaction.

finally, view morphing (steven m. seitz and charles r. dyer.) extended both of the techniques above to artificially create realistic in-between views of an object (or two similar objects) as 2-dimensional images taken from two different viewpoints.

i hope i succeeded in catching your attention with my computer graphic approach to the latest morphing techniques.

5. references

thaddeus beier, and shawn neely, feature-based image metamorphosisproc. siggraph 92. in computer graphics (1992) pp. 35-42.

georg wolberg, skeleton-based image warping

the visual computer 1989. pp. 95-108.